Kubernetes CRL support with the front-proxy-client and Haproxy

“Why does kube-apiserver not take a CRL file?”

Kubernetes clusters at OSSO are usually set up using a PKI, from which we also create client certificates for users.

Unfortunately, users can sometimes be a little careless [citation needed] and sometimes they manage to share their keys with the world. PKI caters for lost certificates through the issuance of periodic (or ad-hoc) Certificate Revocation Lists (CRLs), in which the PKI admins can place certificates that have been compromised: if a certificate is listed in the CRL, it is revoked, and will not be accepted by the TLS agent.

At the time of writing, there is no support for CRLs or for their cousin OCSP in Kubernetes. There have been plenty of requests for this feature, but since 2015, nothing concrete has emerged.

For our infrastructure, which relies heavily on client certificates, operating without a way to revoke them was no longer an option.

There is one possible workaround: reconfiguring RBAC rules, making sure that user (CN) and group (O) have no role bindings anymore. But that disallows reuse of the same commonName when the user “lost” their certificate and simply wants a new one.

No, we want CRLs.

CRL support by using Haproxy

There are applications available that do support CRL. One of these is Haproxy, a tool we already use in our setups. Haproxy can work as a TCP proxy, transparently forwarding the TLS. Or it can terminate TLS traffic. For our purposes the TLS proxy is perfect, because it can verify the validity of certificates (ca-file) and check them against the CRL (crl-file). If the certificate is invalid or revoked, the TLS handshake aborts.
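To see what that ca-file plus crl-file check amounts to, here is a minimal sketch that uses the openssl command line instead of Haproxy. Everything in it is an assumption made for illustration: the throwaway demo-ca, the user john in group developers, and all file names live in a temporary directory and have nothing to do with the real files under /etc/kubernetes/pki. It also assumes an openssl binary with its default configuration file installed.

```shell
# Throwaway PKI in a temp dir; nothing here touches /etc/kubernetes/pki.
work=$(mktemp -d)
cd "$work"

# 1. A demo CA, plus a key and CSR for a client (CN = user, O = group).
openssl req -x509 -newkey rsa:2048 -nodes -days 30 \
    -subj "/CN=demo-ca" -keyout ca.key -out ca.crt 2>/dev/null
openssl req -newkey rsa:2048 -nodes \
    -subj "/O=developers/CN=john" -keyout john.key -out john.csr 2>/dev/null

# 2. Minimal database and config for "openssl ca" (hypothetical names).
mkdir db
: > db/index.txt
echo 01 > db/serial
cat > ca.cnf <<'EOF'
[ ca ]
default_ca = demo
[ demo ]
database         = db/index.txt
serial           = db/serial
new_certs_dir    = db
default_md       = sha256
default_days     = 30
default_crl_days = 7
policy           = policy_any
[ policy_any ]
organizationName = optional
commonName       = supplied
EOF

# 3. Issue the client certificate and check it against the CA only.
openssl ca -batch -notext -config ca.cnf -cert ca.crt -keyfile ca.key \
    -in john.csr -out john.crt 2>/dev/null
if openssl verify -CAfile ca.crt john.crt >/dev/null 2>&1; then
    valid_before=yes
else
    valid_before=no
fi

# 4. Revoke the certificate and publish a fresh CRL.
openssl ca -config ca.cnf -cert ca.crt -keyfile ca.key -revoke john.crt 2>/dev/null
openssl ca -config ca.cnf -cert ca.crt -keyfile ca.key -gencrl -out crl.pem 2>/dev/null

# 5. Same check, now also consulting the CRL (the crl-file step).
if openssl verify -crl_check -CAfile ca.crt -CRLfile crl.pem john.crt 2>&1 \
        | grep -q 'certificate revoked'; then
    revoked_after=yes
else
    revoked_after=no
fi

echo "valid before revocation: $valid_before"
echo "rejected after revocation: $revoked_after"
```

The certificate itself does not change between step 3 and step 5; only the CRL does. That is why the proxy in front of the apiserver needs to be handed a current CRL.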

Using the Kubernetes Aggregation Layer

Using a TLS proxy creates one problem: Haproxy does not have the original client’s key. So it cannot connect to the kube-apiserver using their credentials. This means that the kube-apiserver will not recognise the user – “Hey it’s John!” – but will recognise Haproxy – “You again? You seem to be the only one that talks to me.”

For Haproxy it would be pointless to have RBAC rules: everyone would get the same permissions. To work around this we can reuse the Kubernetes Aggregation Layer setup:

We let Haproxy reuse the front-proxy-client client certificate when forwarding the requests to Kubernetes. Haproxy extracts the user (CN) and group (O) from the supplied client certificates and puts them in request headers X-Remote-{User,Group} before forwarding the request to the Kubernetes apiserver. Kubernetes is then able to authenticate and authorize the request using RBAC.
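The mapping from certificate subject to request headers can be sketched in a few lines of shell. This is only an illustration of the idea, not what Haproxy runs internally: dn_field is a hypothetical helper, the subject string is made up, and the slash-separated form is assumed to match what `openssl x509 -noout -subject -nameopt compat` would print for such a certificate.

```shell
# dn_field DN FIELD: print the first value of FIELD from a slash-separated
# distinguished name such as "/O=developers/CN=john".
# Hypothetical helper; it does not handle values that contain a "/".
dn_field() {
    dn=$1
    field=$2
    # One RDN per line, then strip "FIELD=" from the first match.
    printf '%s\n' "$dn" | tr '/' '\n' | sed -n "s/^${field}=//p" | head -n 1
}

subject="/O=developers/CN=john"   # made-up client certificate subject

user=$(dn_field "$subject" CN)
group=$(dn_field "$subject" O)

# These are the headers the proxy would add to the forwarded request.
printf 'X-Remote-User: %s\nX-Remote-Group: %s\n' "$user" "$group"
```

Note that the sketch returns only the first O it finds. A certificate with several O entries (several groups) would need extra handling, so it is worth checking how your proxy configuration deals with that case.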

The Kubernetes Aggregation Layer uses the same mechanism: its trusted certificate is allowed to “impersonate” another user. It can call back to the Kubernetes apiserver with requests authorized as the original user.

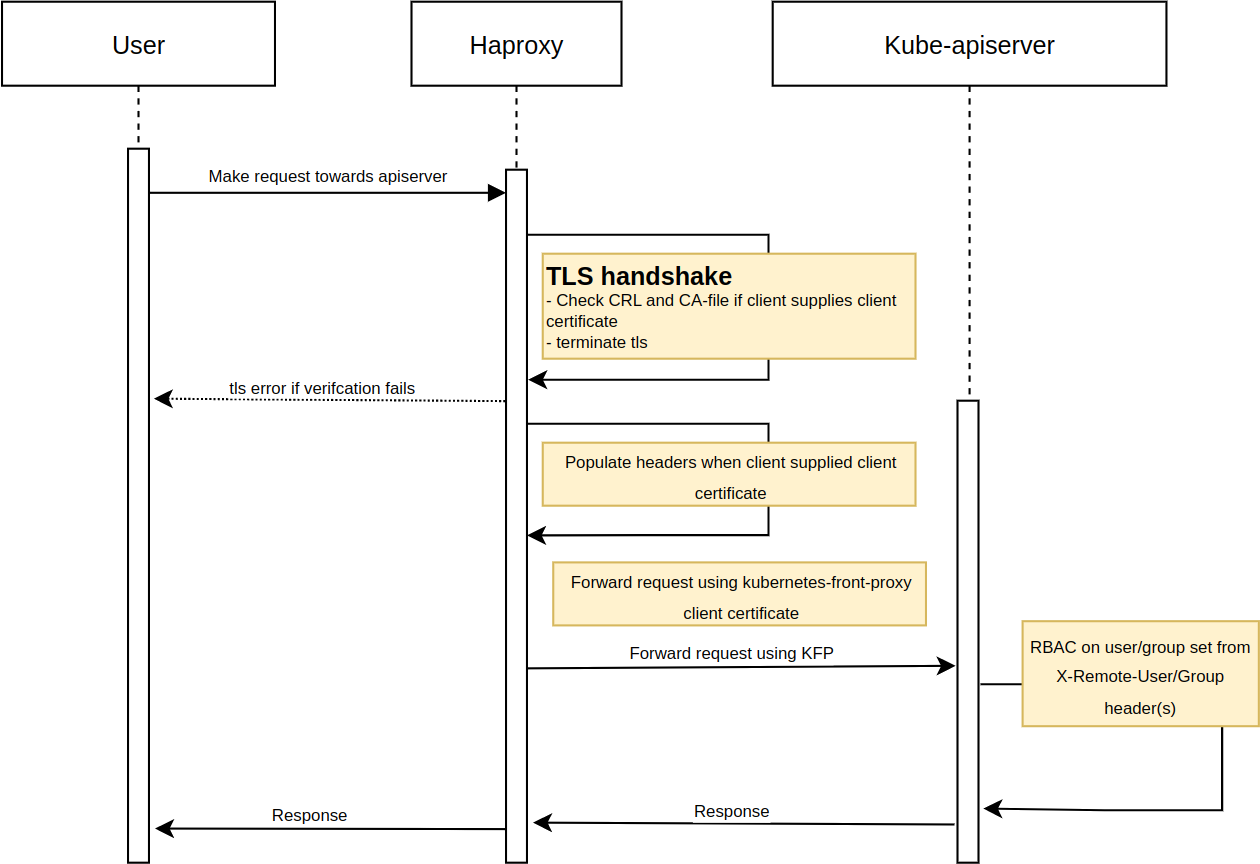

Diagram

For our Haproxy setup, things look like this:

Setting it up

The following certificates are reused:

| file | description |
|---|---|
| /etc/kubernetes/pki/ca.crt | One or more (intermediate) certificates that have signed client certificates (--etcd-cafile= and --client-ca-file= kube-apiserver options). |
| /etc/kubernetes/pki/apiserver.pem | Private key and Kubernetes apiserver certificate (concatenated), signed by the CA from /etc/kubernetes/pki/ca.crt (kube-apiserver gets them as --tls-private-key-file= and --tls-cert-file=). |
| /etc/kubernetes/pki/crl.pem | The certificate revocations. One CRL for each CA in ca.crt. |
| /etc/kubernetes/pki/front-proxy-client.pem | Holds the privkey and cert for the front-proxy-client, signed by the CA defined in /etc/kubernetes/pki/front-proxy-ca.crt. Required to trust headers in forwarded requests from haproxy to the Kubernetes apiserver. |

OBSERVE: Haproxy mandates that there is a CRL for every CA in ca.crt.

The kube-apiserver options used/altered:

    --bind-address=127.0.0.1
    --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    --requestheader-allowed-names=front-proxy-client
    --requestheader-username-headers=X-Remote-User
    --requestheader-group-headers=X-Remote-Group

We make sure the Kubernetes apiserver is bound to 127.0.0.1 to prevent bypassing the authenticating proxy (and in doing so bypassing the CRL checks).

--requestheader-allowed-names limits the accepted CN to the value front-proxy-client; certificates with any other CN are not allowed to supply these headers.

The Haproxy configuration:

/etc/haproxy/haproxy.cfg

    listen api-in
        bind 10.66.66.66:6443 ssl crt /etc/kubernetes/pki/apiserver.crt verify optional ca-file /etc/kubernetes/pki/ca-int.crt ca-verify-file /etc/kubernetes/pki/ca.crt crl-file /etc/kubernetes/pki/crl.pem
        mode http
        http-request set-header X-Remote-User %{+Q}[ssl_c_s_dn(CN)]
        http-request set-header X-Remote-Group %{+Q}[ssl_c_s_dn(O)]
        default-server check ssl verify required ca-file /etc/kubernetes/pki/ca.crt crt /etc/kubernetes/pki/front-proxy-client.crt
        server 127.0.0.1 127.0.0.1:6443
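A CRL is only useful if the proxy is looking at a recent one. As far as we know, Haproxy reads the crl-file when it starts, so a newly issued CRL takes effect after a reload. The helper below is a hedged sketch of that routine: install_crl is a hypothetical name, the paths are the ones from this post, and it assumes Haproxy runs as a systemd unit called haproxy.

```shell
# install_crl NEW_CRL: hypothetical helper that puts a new CRL in place
# and reloads Haproxy. Paths and unit name are assumptions from this post.
install_crl() {
    new_crl=$1
    live_crl=/etc/kubernetes/pki/crl.pem
    cfg=/etc/haproxy/haproxy.cfg

    # Refuse anything that does not parse as a PEM CRL.
    # (Only the first CRL in a bundle is inspected here.)
    openssl crl -in "$new_crl" -noout || return 1

    # Copy next to the live file, then rename, so Haproxy never sees
    # a half-written CRL.
    cp "$new_crl" "$live_crl.new" || return 1
    mv "$live_crl.new" "$live_crl" || return 1

    # Let Haproxy validate its configuration before reloading.
    haproxy -c -f "$cfg" || return 1
    systemctl reload haproxy
}
```

Usage would be something like `install_crl /tmp/crl-new.pem`, run from whatever job fetches the CRL from the PKI. Remember that the file must contain a CRL for every CA in ca.crt.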

This is now the main entry point for all Kubernetes apiserver traffic. This means that users, but also applications like kubelet and kube-proxy will use this endpoint for accessing the API.

The Kubernetes apiserver runs on 127.0.0.1:6443. Haproxy runs on the host IP (10.66.66.66) on port 6443. This way we can use Haproxy as a drop-in replacement for API access.

Does it work?

Using a revoked certificate:

    $ kubectl get nodes
    Unable to connect to the server: remote error: tls: revoked certificate

Using a valid certificate:

    $ kubectl get nodes
    NAME                        STATUS   ROLES   AGE   VERSION
    node1.jedi.dostno.systems   Ready    zl      44d   v1.24.8

Known backdoor(s)?

This setup closes off almost every way to connect directly to the apiserver from, let’s say, a Kubernetes pod. All incoming traffic ends up at Haproxy running on the control plane nodes.

There is one obvious exception: a pod with host networking. When pods are spawned with host networking they get the same network as the host, and thus direct access to the apiserver at 127.0.0.1. To prevent this you could implement some policies. Kyverno is quite a useful tool for this.

On the other hand, you should take extra care with host networking pods anyway. If revoked certificates are getting through there, you probably have bigger issues…
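To get a feeling for how many pods this concerns, you can list everything that currently runs with host networking. The function below is a small sketch: list_hostnetwork_pods is a hypothetical name, and it assumes a kubectl that is configured with enough permissions to list pods in all namespaces.

```shell
# list_hostnetwork_pods: print namespace/name of every pod that has
# spec.hostNetwork set to true. Hypothetical helper; needs a working kubectl.
list_hostnetwork_pods() {
    kubectl get pods --all-namespaces \
        -o jsonpath='{range .items[?(@.spec.hostNetwork==true)]}{.metadata.namespace}{"/"}{.metadata.name}{"\n"}{end}'
}
```

Expect control plane and networking components to show up in that list. Anything else is a good candidate for a closer look, or for a policy exception you grant on purpose.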