Kubernetes: the new Gateway API

Transitioning K8S towards the Gateway API

We’ve heard the chatter about the retirement of the ingress-nginx ingress controller. It seems to be a hot topic! We’ve had quite a few people ask:

“Hey OSSO, have you seen this already?”

Yes! We have!

Actually, we’re right on top of it. We plan to smoothly transition and adapt, and today we want to clarify OSSO’s approach going forward.

This post outlines the transition from the established Kubernetes Ingress resource to its successor, the Gateway API. It covers the reasons for the transition, our proposed migration path, and the key architectural concepts.

The Ingress Resource: End of an Era

The original Kubernetes Ingress resource (networking.k8s.io/v1), while fundamental, has stagnated. The resource is effectively considered “frozen”: no new functionality or significant enhancements will be added to its core specification.

The move toward the newer Gateway API is something we were already anticipating. However, the timeline was significantly accelerated by the “Ingress NGINX Retirement” announcement on November 11, 2025. Since OSSO — and by extension, many of you — implemented this solution widely, and it is now nearing the end of its support lifecycle, a timely migration is essential for the health of our Kubernetes infrastructure.

What is the Gateway API? (And do we really need it?)

Do we need it?

No, not necessarily. We could simply switch to another well-maintained ingress controller that supports the old spec.

However, at OSSO, we like to stay current with new developments. Rather than learning how yet another legacy ingress controller works (and how to troubleshoot it), this seems like the perfect moment to embrace the future of routing Kubernetes traffic: the Gateway API.

The Gateway API is not merely a replacement for Ingress; it represents a redesign of how external and internal traffic routing is managed within Kubernetes clusters, with a strong focus on separation of concerns and extensibility.

In legacy Ingress, the Ingress object bundles multiple, often unrelated concerns:

- routing rules (paths and target services)

- TLS configuration (TLS secrets and hostnames)

- controller tuning (e.g. nginx.ingress.kubernetes.io/proxy-read-timeout)

- policy knobs (e.g. nginx.ingress.kubernetes.io/cors-allow-origin)
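To make the bundling concrete, here is a minimal sketch of one legacy Ingress that carries all four concerns at once. The resource name, Secret name, hostname, annotation values, and the `nginx` ingress class are assumptions for illustration. We also added the `enable-cors` annotation, which ingress-nginx needs before `cors-allow-origin` takes effect.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: bundled-example              # hypothetical name
  annotations:
    # Controller tuning (only understood by ingress-nginx)
    nginx.ingress.kubernetes.io/proxy-read-timeout: "120"
    # Policy knobs (only understood by ingress-nginx)
    nginx.ingress.kubernetes.io/enable-cors: "true"
    nginx.ingress.kubernetes.io/cors-allow-origin: "https://app.example.com"
spec:
  ingressClassName: nginx            # assumed class name
  tls:                               # TLS configuration
  - hosts:
    - foo.example.com
    secretName: foo-example-tls      # hypothetical Secret
  rules:                             # Routing rules
  - host: foo.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: service1
            port:
              number: 80
```

Everything under `annotations` is controller-specific. Those are the parts that do not carry over when the controller is swapped.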

A graph of the legacy Ingress resource would be misleadingly simple, like this:

    Ingress
       |
       v
    Service

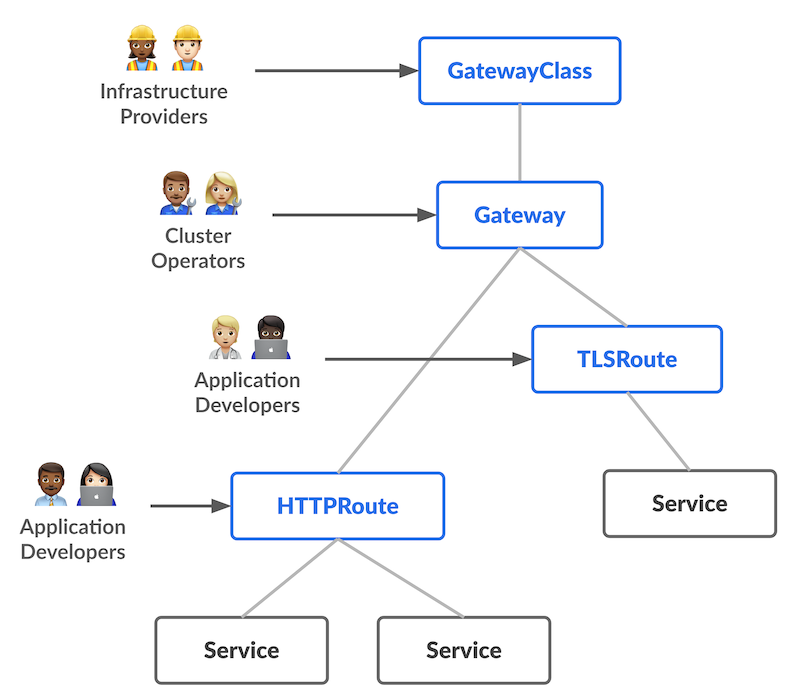

In contrast, the Gateway API decomposes these responsibilities into multiple, purpose-built resources.

The new resources in a nutshell:

- GatewayClass: defines which controller implementation is used

- Gateway: defines where traffic enters the cluster (listeners, addresses)

- Route (HTTPRoute, TLSRoute, …): defines how traffic is matched and forwarded
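As a rough sketch of the first of these resources, a GatewayClass is a small cluster-scoped object that points at a controller. The name below matches the `gatewayClassName` used in the Gateway example later in this post. The `controllerName` value is our assumption for Envoy Gateway; other implementations publish their own value.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: envoy-gateway-class          # cluster-scoped, so no namespace
spec:
  # Assumption: the controller name used by Envoy Gateway.
  # Check the documentation of the implementation you actually run.
  controllerName: gateway.envoyproxy.io/gatewayclass-controller
```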

Role Separation

A core principle of the Gateway API is the clear division of responsibilities among different personas:

- Infrastructure Provider/Cluster Operator (OSSO): We manage the GatewayClass (defining the controller type) and deploy the Gateway resource. We handle the listener ports, IP addresses, load balancers, and other infrastructure-level concerns. This allows us to control where and how traffic enters the cluster, without defining application-level routing.

- Application Developer (You): You create Route resources (e.g. HTTPRoute, TCPRoute, TLSRoute). These attach to a specific Gateway we provide and they define your application-specific routing rules, such as path matching, header manipulation, and backend service destinations. You can define routing behavior without modifying load balancer configuration, TLS listeners, or controller-level settings.

Having these responsibilities separated in different resources means the roles can edit them separately and avoid trampling on each other’s changes.
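The separation can also be enforced rather than just agreed upon. As a sketch, a Gateway listener can restrict which namespaces are allowed to attach Routes through `allowedRoutes`. The Gateway name and the `gateway-access` label are hypothetical. Whether OSSO restricts by label or allows all namespaces is a per-cluster decision, not something this example prescribes.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: public                       # hypothetical name
  namespace: envoy-gateway-public
spec:
  gatewayClassName: envoy-gateway-class
  listeners:
  - name: http
    protocol: HTTP
    port: 8080
    allowedRoutes:
      namespaces:
        # Only namespaces carrying this label may attach Routes.
        from: Selector
        selector:
          matchLabels:
            gateway-access: public   # hypothetical label
```

With this in place, the operator owns the Gateway and the label policy, while developers only touch Routes inside their own namespaces.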

Clarity and Portability

The structured nature of the API resources provides greater clarity on the intended traffic flow. It promotes configuration portability across different cluster environments and gateway implementations, meaning your routing logic is less tied to the specific controller running behind the scenes.

Where do we go from here?

To make the transition to the Gateway API as seamless as possible, we are adopting a phased approach that closely mirrors our existing Ingress configuration.

1. Set up two main Gateways

- Public Gateway: Exclusively for external traffic arriving from public load balancers.

- Internal Gateway: Handles traffic confined to the private network (coming from inside the VRF or VPN).

2. Gradually move configurations

We will shift routing configurations from legacy Ingress resources to the new Gateway API resources (e.g. HTTPRoute, TCPRoute).

This familiar structure is beneficial because it aligns with our current traffic flow. It simplifies initial troubleshooting and provides ample time for thorough testing and validation of the new setup before we fully decommission the Ingress components.
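In manifest form, the two-Gateway layout could look roughly like the sketch below. The Gateway names and the `envoy-gateway-internal` namespace are assumptions. How each Gateway is bound to a public or private load balancer is implementation-specific and left out here.

```yaml
# Public Gateway: receives traffic from the public load balancers.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: public                       # hypothetical name
  namespace: envoy-gateway-public
spec:
  gatewayClassName: envoy-gateway-class
  listeners:
  - name: http
    protocol: HTTP
    port: 8080
    allowedRoutes:
      namespaces:
        from: All
---
# Internal Gateway: receives traffic from inside the VRF or VPN only.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: internal                     # hypothetical name
  namespace: envoy-gateway-internal  # hypothetical namespace
spec:
  gatewayClassName: envoy-gateway-class
  listeners:
  - name: http
    protocol: HTTP
    port: 8080
    allowedRoutes:
      namespaces:
        from: All
```

A Route then picks its audience by naming one of these Gateways (or both) in its `parentRefs`.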

Gateway API Implementation Options

The Gateway API is just a specification — it requires a specific implementation (a “Gateway Controller”) to enforce the rules. We’ve been doing our homework. Here is a look at our thought process:

- Envoy Gateway

  Envoy Proxy has historically been complex to set up. A couple of years ago, dynamic configuration required highly verbose management. Envoy Gateway changes this. It simplifies deploying Envoy as a data plane by using the standard Gateway API. It’s a minimalist, Envoy-native implementation that lowers the barrier to entry.

- Kgateway

  Built on open source and open standards, Kgateway is an extensive implementation. Internally it’s also based on Envoy. It scales from lightweight micro-gateways to massive centralized gateways. While mature, we found it had some drawbacks, specifically:

  - It offers a massive feature set that many clusters simply don’t need.

  - Initially, it lacked support for proxy-protocol (mandatory for our setups), though the GitHub issue tracker suggests this is being resolved: kgateway#11161 kgateway@c81a45b

- Cilium Gateway API

  Since many of our managed clusters run Cilium, this seemed convenient. However, not all clusters run it. We want to avoid maintaining multiple Gateway API implementations across our stack, so Cilium wasn’t the universal fit we needed.

- NGINX Gateway Fabric

  Naturally, we investigated the official next-gen NGINX solution. However, after a Proof of Concept, we decided not to pursue this further. An Envoy-based solution simply fits our modern stack better.

It is good to know that Cilium, Envoy Gateway and Kgateway are all CNCF-backed projects, which gives us confidence in their long-term availability.

The Verdict: We are leaning heavily toward an Envoy-based solution (likely Envoy Gateway or Kgateway) for its performance and CNCF backing. This is the way forward. And because Envoy Gateway and Kgateway are largely interchangeable when only a minimal feature set is used, choosing either is fine.
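Since proxy-protocol support is mandatory for our setups, here is a sketch of how that could be switched on with Envoy Gateway, using its ClientTrafficPolicy extension resource. This is an assumption about one possible setup, not our final configuration. The policy name is hypothetical, and the field names (`targetRef`, `enableProxyProtocol`) have changed between Envoy Gateway releases, so check the API reference for the version you run.

```yaml
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: enable-proxy-protocol        # hypothetical name
  namespace: envoy-gateway-public    # must match the target Gateway's namespace
spec:
  # Apply the policy to the Gateway from the example later in this post.
  targetRef:
    group: gateway.networking.k8s.io
    kind: Gateway
    name: http-and-https
  # Expect a PROXY protocol header from the load balancer in front.
  enableProxyProtocol: true
```

Note that this resource is specific to Envoy Gateway. Settings like this are where the two Envoy-based options stop being interchangeable.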

Let’s get practical!

Enough theory. What does this actually look like for you, the developer?

If you are used to writing Ingress manifests, the change is significant but logical: instead of jamming everything into one resource, we split the “Infrastructure” from the “Routing”.

The Old Way (Ingress)

Currently, one might define an Ingress like this:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: tls-example-ingress
    spec:
      tls:
      - hosts:
        - foo.example.com
        secretName: testsecret-tls
      rules:
      - host: foo.example.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service1
                port:
                  number: 80

The New Way (Gateway API)

In the new world, OSSO provides the Gateway. You don’t need to worry about ports or IPs; you simply define an HTTPRoute that attaches to our infrastructure.

A quick practical note: While this example combines the HTTP and HTTPS listeners for convenience, in practice, we plan to deploy two separate Gateways. This gives you the flexibility to attach your route to either the HTTP Gateway, the HTTPS Gateway, or both — whichever your setup requires.

1. The Gateway (Managed by OSSO)

You generally won’t create this, but it helps to know it exists. This is where the TLS certificates are configured.

    kind: Gateway
    apiVersion: gateway.networking.k8s.io/v1
    metadata:
      name: http-and-https
      namespace: envoy-gateway-public
    spec:
      gatewayClassName: envoy-gateway-class
      listeners:
      - protocol: HTTP
        port: 8080
        name: http
        allowedRoutes:
          namespaces:
            from: All
      - protocol: HTTPS
        port: 8443
        hostname: "*.example.com"
        name: https-example.com
        allowedRoutes:
          namespaces:
            from: All
        tls:
          mode: Terminate
          certificateRefs:
          - name: foo.example.com--tls
          - name: bar.example.com--tls

2. The Route (Managed by YOU)

This is where you define how traffic reaches your app.

Note the parentRefs section — that is where you plug the HTTPRoute into the Gateway.

    apiVersion: gateway.networking.k8s.io/v1
    kind: HTTPRoute
    metadata:
      name: foo.example.com
      namespace: foo-example-ns
    spec:
      hostnames:
      - foo.example.com
      parentRefs:
      - group: gateway.networking.k8s.io
        kind: Gateway
        name: http-and-https
        namespace: envoy-gateway-public
      rules:
      - backendRefs:
        - kind: Service
          name: nginx
          port: 8080
        matches:
        - path:
            type: PathPrefix
            value: /
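The route above attaches to every listener of the Gateway that accepts it. If you want different behavior per listener, a `parentRefs` entry can name a single listener through `sectionName`. The sketch below redirects plain HTTP to HTTPS and only serves the application over the HTTPS listener. The name `foo-redirect` is hypothetical, and the redirect assumes the load balancer in front exposes HTTPS on the default port 443.

```yaml
# Route 1: attached only to the plain-HTTP listener; redirects to HTTPS.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: foo-redirect                 # hypothetical name
  namespace: foo-example-ns
spec:
  hostnames:
  - foo.example.com
  parentRefs:
  - name: http-and-https
    namespace: envoy-gateway-public
    sectionName: http                # listener name from the Gateway
  rules:
  - filters:
    - type: RequestRedirect
      requestRedirect:
        scheme: https
        statusCode: 301
---
# Route 2: attached only to the HTTPS listener; forwards to the backend.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: foo.example.com
  namespace: foo-example-ns
spec:
  hostnames:
  - foo.example.com
  parentRefs:
  - name: http-and-https
    namespace: envoy-gateway-public
    sectionName: https-example.com   # listener name from the Gateway
  rules:
  - backendRefs:
    - name: nginx
      port: 8080
```

Once HTTP and HTTPS live on two separate Gateways, the same split works by pointing each route at a different Gateway name instead of a different `sectionName`.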

Wrapping up

We know migrations are rarely anyone’s favorite task. That is why we are doing the heavy lifting on the infrastructure side.

During the migration period, legacy Ingress resources and Gateway API resources can co-exist, so nothing has to move in one big bang.

We are here to help and assist with the actual migration or to discuss the best strategy for your specific setup. If you have questions or need a hand getting started, you know where to find us.

Sources & Further Reading

- The new standard:

- The Implementations:

- Background Context: Kubernetes Blog: Gateway API goes GA

- The one that started this flow of events: Ingress NGINX Retirement